Introduction

The rise of artificial intelligence (AI) demands ever-greater volumes of computing, storage and network capacity—and that in turn places enormous pressure on the infrastructure of conventional data centres. On Earth, data centre operators grapple with rising electricity demand, cooling and water constraints, limited land footprints, and an increasing carbon-emissions burden.

In response, a radical proposition has emerged: move data centres into space (orbit or high-altitude environments) to exploit abundant solar energy and ultra-efficient cooling environments. But is this truly a viable “best approach” for solving the energy-and-efficiency challenge for AI, or merely a speculative future? This article explores the promise, the limitations, and the strategic implications of space-based data centres for AI workloads.

The Case for Space-Based Data Centres

Uninterrupted Solar Energy & Reduced Cooling Load

One of the biggest operational costs for terrestrial data centres is power (for compute + cooling + facility overhead). According to the International Energy Agency (IEA), cooling systems alone can represent 7 % to over 30 % of electricity usage for a data centre, depending on efficiency.

In space, two significant advantages present themselves:

A solar array in a suitable orbit (for example, a dawn-dusk sun-synchronous orbit) receives nearly uninterrupted sunlight, free of weather, clouds and most night/day cycling, and thus can generate more energy per square metre than on Earth.

The cold background of deep space (or the shadow of Earth) gives radiators a very low-temperature heat sink, which could allow dramatically lower cooling overheads than traditional data centre cooling loops, although in a vacuum heat can only be rejected by radiation.

For example, a recent analysis found that space-based data centres could generate solar energy at “upwards of 40 % more efficiency than on Earth” and produce “around 10 times lower emissions” when including launch impacts.
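To make the per-square-metre comparison concrete, here is a minimal sketch rather than a reproduction of the cited analysis. The panel efficiency, the sunlit fraction in orbit and the ground capacity factor are illustrative assumptions; only the approximate solar constant (about 1361 W/m²) and the 1000 W/m² standard test irradiance are well-established reference values.

```python
# Rough yearly energy yield per square metre of panel, in orbit vs on the ground.
# Efficiency, sunlit fraction and capacity factor are assumptions for illustration.

SOLAR_CONSTANT_W_M2 = 1361.0  # approximate irradiance above the atmosphere
GROUND_PEAK_W_M2 = 1000.0     # standard test-condition irradiance at the surface
HOURS_PER_YEAR = 8760.0


def annual_energy_kwh_per_m2(irradiance_w_m2, panel_efficiency, illuminated_fraction):
    """Electrical energy per square metre of panel per year, in kWh."""
    watts = irradiance_w_m2 * panel_efficiency * illuminated_fraction
    return watts * HOURS_PER_YEAR / 1000.0


# Assumed: 20 % efficient panels in both cases.
orbit = annual_energy_kwh_per_m2(SOLAR_CONSTANT_W_M2, 0.20, 0.99)  # assumed near-continuous sunlight
ground = annual_energy_kwh_per_m2(GROUND_PEAK_W_M2, 0.20, 0.20)    # assumed 20 % capacity factor

print(f"orbit:  {orbit:.0f} kWh per m^2 per year")
print(f"ground: {ground:.0f} kWh per m^2 per year")
print(f"irradiance ratio: {SOLAR_CONSTANT_W_M2 / GROUND_PEAK_W_M2:.2f}")
print(f"yearly energy ratio: {orbit / ground:.1f}")
```

Under these assumptions the raw irradiance advantage is about 1.36, while most of the yearly energy gap comes from the fraction of time the panel is lit. A different orbit or a sunnier ground site would shift the result considerably.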

AI Workloads and Infrastructure Pressure

AI training, inference, edge AI and global cloud services are driving demand for data centre compute. Some studies suggest AI-related demand may drive a ~165 % increase in data centre electricity demand by 2030.

Thus, the infrastructure must evolve—not just in hardware architecture (GPUs/TPUs) but in site architecture: energy sourcing, location, cooling architecture, latency/distribution trade-offs.

Space-based facilities present a way to decouple compute growth from Earth’s grid, land, water and cooling constraints.

Strategic/Regulatory Benefits

Beyond energy and cooling, space-based data centres may offer other strategic advantages:

Data sovereignty: placing compute in orbit may mitigate some regional jurisdiction issues.

Reduced competition for land, water, and utility connections, which are increasingly constrained on Earth.

Potentially globally accessible “edge” computing platforms with unique geometry (satellite links).

Major Technical & Economic Challenges

Launch, Logistics & Lifecycle Costs

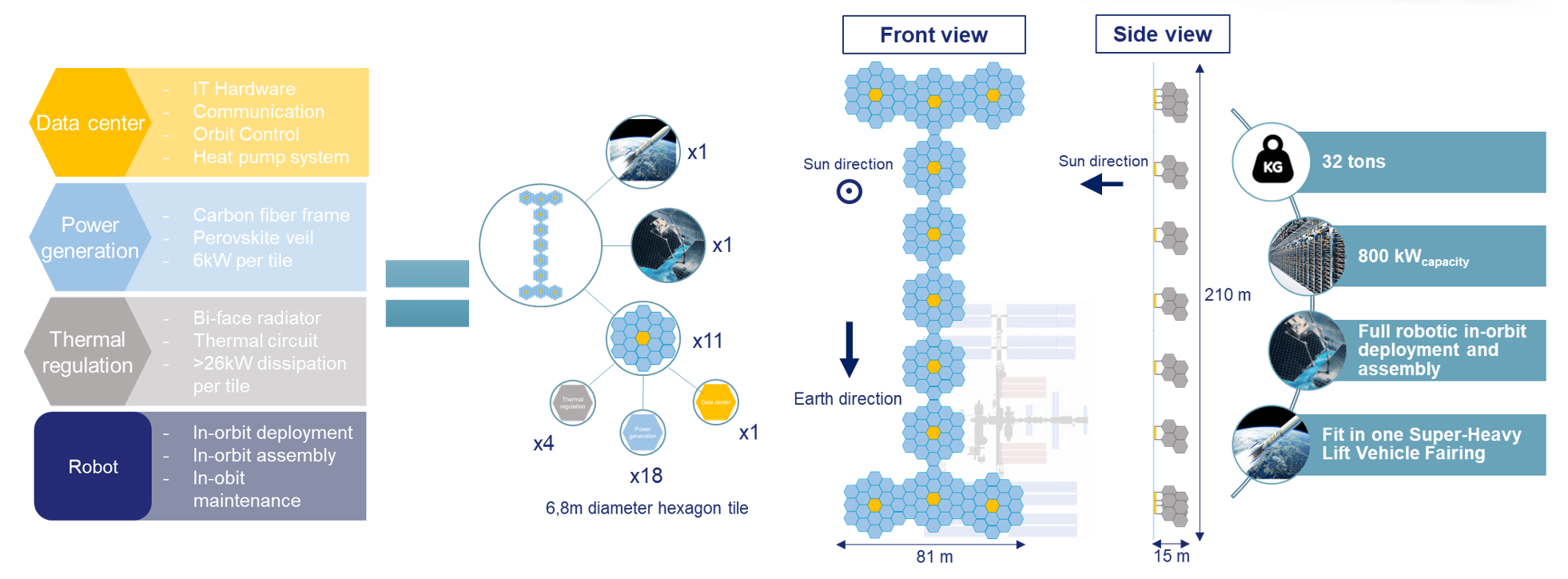

Getting heavy compute hardware, cooling/radiator systems, solar arrays and support infrastructure into orbit is extremely expensive and risky. For instance, one estimate suggests building a gigawatt-scale orbital data centre may cost tens of billions of dollars and require millions of tonnes of solar panels.

Even if solar generation is 40 % more efficient, you must still amortise the costs of launch, assembly, radiation hardening, maintenance and the space supply chain over the facility's working life.
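The amortisation argument can be sketched as a simple capital-cost-per-kWh calculation. Every number in the example call (module mass, launch price per kilogram, hardware cost, lifetime, availability) is a hypothetical placeholder chosen only to show the arithmetic, not an estimate from any source.

```python
# Capital cost (hardware plus launch) spread over the energy delivered in service.
# All example inputs are hypothetical placeholders.

HOURS_PER_YEAR = 8760.0


def amortised_cost_per_kwh(hardware_cost_usd, mass_kg, launch_cost_usd_per_kg,
                           power_kw, lifetime_years, availability=0.99):
    """Return capital cost in USD per kWh of power delivered over the lifetime."""
    if power_kw <= 0 or lifetime_years <= 0 or not 0 < availability <= 1:
        raise ValueError("power, lifetime and availability must be positive")
    capex_usd = hardware_cost_usd + mass_kg * launch_cost_usd_per_kg
    energy_kwh = power_kw * HOURS_PER_YEAR * lifetime_years * availability
    return capex_usd / energy_kwh


# Hypothetical 1 MW module: 20 t launched at 1,500 USD/kg, 30 M USD of hardware, 10 years.
cost = amortised_cost_per_kwh(
    hardware_cost_usd=30e6,
    mass_kg=20_000,
    launch_cost_usd_per_kg=1_500,
    power_kw=1_000,
    lifetime_years=10,
)
print(f"amortised capital cost: {cost:.2f} USD/kWh")
```

The sketch leaves out maintenance, replacement launches and financing, so it gives a lower bound on the real figure. Its main use is to show how strongly the result depends on launch price and service lifetime.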

Latency and Network Connectivity

Orbiting or high-altitude facilities may face long network paths, higher latency, and limited bandwidth compared to terrestrial fibre. For AI workloads especially (distributed training, parameter synchronisation, massive data ingestion) the communication topology is critical. The Google Research blog noted that delivering performance comparable to terrestrial data centres “requires links between satellites that support tens of terabits per second.”
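Two quantities dominate this trade-off: the propagation delay set by distance, and the time to move a payload over a link of a given bandwidth. The sketch below computes both. The 550 km altitude, the 100 GB payload and the 10 Tbit/s link are illustrative assumptions, and the delay is a straight-line lower bound that ignores routing, queuing and ground-segment hops.

```python
# Lower-bound propagation delay and idealised transfer time for a satellite link.
# Altitudes, payload size and link rate are illustrative assumptions.

SPEED_OF_LIGHT_KM_S = 299_792.458


def one_way_delay_ms(distance_km):
    """Minimum one-way propagation delay over a straight-line path, in ms."""
    return distance_km / SPEED_OF_LIGHT_KM_S * 1000.0


def transfer_time_s(payload_bytes, link_bits_per_s):
    """Time to push a payload through a link, ignoring protocol overhead."""
    return payload_bytes * 8 / link_bits_per_s


for name, altitude_km in [("low Earth orbit (assumed 550 km)", 550.0),
                          ("geostationary orbit (35,786 km)", 35_786.0)]:
    one_way = one_way_delay_ms(altitude_km)
    print(f"{name}: one-way {one_way:.2f} ms, round trip {2 * one_way:.2f} ms")

# Assumed: 100 GB of parameters exchanged over a 10 Tbit/s inter-satellite link.
sync = transfer_time_s(100e9, 10e12)
print(f"parameter exchange: {sync:.3f} s")
```

The straight-line delay to a satellite directly overhead is about 1.8 ms at 550 km and about 119 ms at geostationary altitude, which is why low orbits are the usual assumption for latency-sensitive designs.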

Maintenance, Upgradability and Hardware Refresh

Data centres evolve constantly: hardware is refreshed every few years, components fail, modules are upgraded, and cooling, power and network systems need physical maintenance. In space, all of this is far harder. Hardware failures may be costly or impossible to service, and upgrading to next-generation accelerators (TPUs/GPUs) involves long lead times and high costs.

Thermal Management & Thermal Radiators

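Although cooling in space seems “free,” it is not. A vacuum has no air or water to carry heat away by convection, so all waste heat must leave by thermal radiation. That requires large radiator surfaces, and the design must also cope with thermal cycling between sunlight and shadow. You’re still constrained by physics.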
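The physical constraint here is the Stefan-Boltzmann law: a surface of area A, emissivity ε and temperature T radiates ε σ A (T⁴ − T_sink⁴). The sketch below inverts it to estimate radiator area. The 1 MW heat load, 300 K radiator temperature and emissivity of 0.9 are illustrative assumptions, and the calculation is idealised: it ignores absorbed sunlight, Earth's infrared and albedo, and assumes a single radiating face.

```python
# Idealised radiator sizing from the Stefan-Boltzmann law.
# Heat load, temperatures and emissivity are illustrative assumptions.

STEFAN_BOLTZMANN_W_M2_K4 = 5.670374419e-8


def radiator_area_m2(heat_load_w, radiator_temp_k, emissivity=0.9, sink_temp_k=0.0):
    """Area needed to radiate heat_load_w from one face at radiator_temp_k."""
    if radiator_temp_k <= sink_temp_k:
        raise ValueError("radiator must be hotter than its surroundings")
    net_flux_w_m2 = emissivity * STEFAN_BOLTZMANN_W_M2_K4 * (
        radiator_temp_k ** 4 - sink_temp_k ** 4
    )
    return heat_load_w / net_flux_w_m2


# Assumed: 1 MW of waste heat, radiator at 300 K, ideal 0 K background.
area = radiator_area_m2(1e6, 300.0)
print(f"radiator area at 300 K: {area:.0f} m^2")

# Running the radiator hotter shrinks it sharply because of the T^4 dependence.
print(f"radiator area at 350 K: {radiator_area_m2(1e6, 350.0):.0f} m^2")
```

With these assumptions, 1 MW of heat needs roughly 2,400 m² of radiator at 300 K. Raising the radiator temperature reduces the area quickly, but chips must then run hotter or a heat pump must be added.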

Energy Storage, Power Variability & Resilience

While solar is abundant, you still need storage (batteries or other) to ride through eclipses, when the orbit passes through Earth's shadow. Terrestrial grids also provide redundancy, backup supply and the flexibility to balance load. The space alternative must match that uptime, disaster recovery and operational reliability.
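Storage for eclipse periods can be sized with a one-line energy balance. In the sketch below, the 35-minute eclipse (roughly the upper end for a low Earth orbit), the 30 % depth of discharge and the 95 % discharge efficiency are illustrative assumptions, as is the 1 MW load.

```python
# Battery capacity needed to carry a constant load through one eclipse.
# Eclipse length, depth of discharge and efficiency are illustrative assumptions.


def battery_capacity_kwh(load_kw, eclipse_minutes,
                         depth_of_discharge=0.3, discharge_efficiency=0.95):
    """Nameplate capacity so that one eclipse uses only the allowed depth of discharge."""
    if not 0 < depth_of_discharge <= 1 or not 0 < discharge_efficiency <= 1:
        raise ValueError("depth of discharge and efficiency must be in (0, 1]")
    energy_needed_kwh = load_kw * eclipse_minutes / 60.0
    return energy_needed_kwh / (depth_of_discharge * discharge_efficiency)


# Assumed: 1 MW load and a 35-minute eclipse on each orbit.
capacity = battery_capacity_kwh(load_kw=1_000, eclipse_minutes=35)
print(f"required battery capacity: {capacity:.0f} kWh")
```

A shallow depth of discharge is assumed because a low-orbit battery may cycle thousands of times per year; allowing deeper discharge shrinks the pack but shortens its life. Orbits with little or no eclipse reduce this requirement.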

Is It a New “Best Approach”? Conditions & Hybrid View

When It Makes Sense

Space-based data centres may be particularly well‐suited when:

The workload is extremely high density (GPU/TPU clusters) where Earth constraints (cooling, land, grid) become binding.

The application can tolerate higher latency or is location‐agnostic (e.g., large model training in batch) rather than ultra-low latency user‐facing services.

There is a willingness to invest in long‐lead, high‐capex infrastructure with future payoff (10-20 year horizon).

The architecture is globally distributed anyway, and the “edge” is conceptualised in orbit or high altitude rather than terrestrial “edge”.

Hybrid & Transitional Reality

In the near and medium term, the “best approach” is likely hybrid: terrestrial data centres with aggressive efficiency (renewables, immersion cooling, modular design) combined with high-altitude or orbital prototypes for specific workloads.

As one analysis suggests: “data centre-enabled High Altitude Platforms (HAPs) … can save up to ~12-14 % of electricity cost” in certain cases.

That suggests orbital and high-altitude platforms may be part of the solution, not necessarily a wholesale replacement of Earth-based facilities.

Key Metrics to Evaluate

When assessing space vs terrestrial, look at:

Power Usage Effectiveness (PUE) and full life-cycle energy and emissions (including launch).

Total cost of ownership over hardware lifetime (including upgrades).

Latency and network bandwidth constraints for the workload.

Maintainability, risk, serviceability of hardware.

Regulatory, data‐sovereignty and security implications.

Scalability: how fast orbital capacity can scale compared with Earth-based hyperscale facilities.
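Of the metrics above, PUE is the easiest to compute: it is total facility energy divided by the energy delivered to IT equipment. The sketch below also derives PUE from the share of electricity spent on cooling, using the 7 % and 30 % bounds cited earlier. The 5 % share for other overhead (power distribution, lighting) is an illustrative assumption.

```python
# Power Usage Effectiveness (PUE) from metered energy or from energy shares.
# The 5 % "other overhead" share is an illustrative assumption.


def pue(total_facility_kwh, it_equipment_kwh):
    """PUE = total facility energy / IT equipment energy (always >= 1)."""
    if it_equipment_kwh <= 0 or total_facility_kwh < it_equipment_kwh:
        raise ValueError("IT energy must be positive and no larger than the total")
    return total_facility_kwh / it_equipment_kwh


def pue_from_shares(cooling_share, other_overhead_share):
    """PUE when cooling and other overhead are given as fractions of total energy."""
    it_share = 1.0 - cooling_share - other_overhead_share
    if it_share <= 0:
        raise ValueError("overhead shares leave no energy for IT equipment")
    return 1.0 / it_share


for cooling in (0.07, 0.30):
    print(f"cooling share {cooling:.0%}: PUE = {pue_from_shares(cooling, 0.05):.2f}")
```

For a fair comparison with an orbital design, the same ratio would need to count the power drawn by pumps, heat pumps and attitude control on the space side, and life-cycle emissions would have to be assessed separately, since PUE says nothing about launch.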

Conclusion

The concept of building data centres in space to power AI workloads is no longer pure science fiction. The technical feasibility is increasingly credible and the drivers (energy scarcity, cooling burdens, water limitations, land constraints, AI compute demands) are real and pressing.

However, declaring space-based data centres the definitive “best” approach at this time would be premature. The economics, logistics, network constraints, maintenance challenges and latency trade-offs are significant. For most organisations and workloads today, optimising terrestrial infrastructure remains the pragmatic choice. That means renewable energy sourcing, advanced cooling, modular design, location optimisation and workload distribution.

That said, for the next generation of ultra-scale AI systems, where compute densities are extreme and Earth’s infrastructure runs into physical limits, space (or high-altitude counterparts) may well become the strategic frontier. Organisations should begin today to examine their long‐term infrastructure strategy, factoring in when and how to shift parts of their AI compute footprint beyond Earth.

In short:

Yes — space-based data centres could be one of the best long-term solutions for the energy and efficiency problem of AI.

But it is conditional, specialised, and complementary, not a wholesale replacement of terrestrial data centres, at least for now.

In the evolving architecture of AI compute infrastructure, space is not a distraction, but a strategic horizon. The wise enterprise begins aligning today.