From Algorithmic Logic to Cognitive Self-Awareness

Introduction

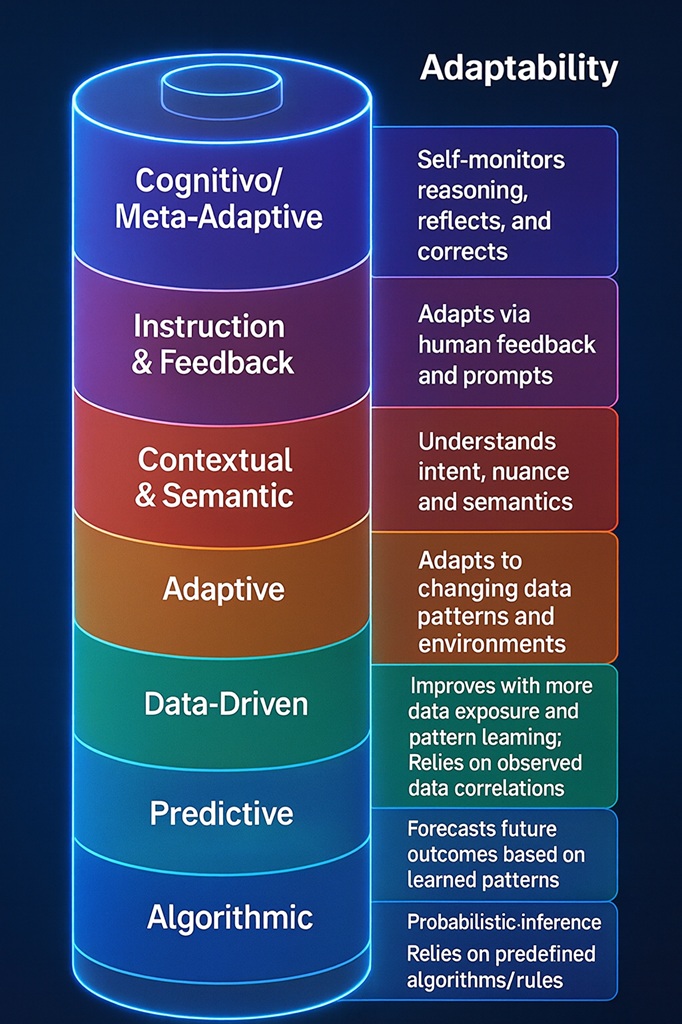

Artificial Intelligence has evolved far beyond simple data analysis. What began as rule-based automation has matured into adaptive, context-aware, and cognitively reflective systems. To capture this evolution, the Seven Levels of AI Adaptability offer a structured way to understand how intelligence progresses from mechanical algorithms to systems that can monitor and correct their own reasoning.

This model—visualized as an “adaptability battery”—measures not size or speed, but awareness and flexibility. Each level stacks on the previous one, expanding how an AI perceives data, interprets context, incorporates feedback, and ultimately regulates its own cognition.

1) Algorithmic — The Foundation of Logic

At the base lies the Algorithmic level. Systems here rely on predefined rules and deterministic procedures (and, where applicable, simple probabilistic inference). Given the same inputs, they produce the same outputs.

Classic software programs, rule engines, and early expert systems belong here. Their adaptability is effectively zero—they execute exactly as coded. This is automation without intelligence: fast, precise, and controllable, but rigid. When confronted with ambiguity or novelty, these systems cannot meaningfully adjust beyond the rules they were given.
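As a rough illustration of this level, a rule-based router might look like the sketch below. The `Order` type, the thresholds, and the country list are hypothetical and chosen only for the example; the point is that the output is fully determined by the coded rules.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Order:
    amount: float
    country: str


def route_order(order: Order) -> str:
    """Apply fixed, hand-written rules; the same input always gives the same output."""
    # Hypothetical thresholds, for illustration only.
    if order.amount > 10_000:
        return "manual_review"
    if order.country not in {"US", "CA"}:
        return "extra_checks"
    return "auto_approve"


decision = route_order(Order(amount=50.0, country="US"))
```

Nothing in this function changes unless a developer edits the rules, which is exactly the rigidity described above.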

2) Predictive — Forecasting What Comes Next

Above pure rules sits Predictive capability: models that forecast future outcomes based on learned patterns. Using statistical learning, time-series analysis, and deep neural networks, they anticipate what is likely to happen.

Examples include risk scoring, demand forecasting, and failure prediction. Predictive systems are proactive compared with simple analytics; they move beyond describing the past to projecting the future. Yet they still inherit the limits of their training signal: when the world shifts, forecasts degrade unless the model or data are refreshed.
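A minimal sketch of a predictive model, assuming simple exponential smoothing as the forecasting method (one option among many, not something the framework prescribes). The demand figures are made up. It also shows the limitation noted above: a forecast fitted on a stable period misses badly once the underlying level shifts.

```python
def exp_smooth_forecast(history: list[float], alpha: float = 0.5) -> float:
    """One-step-ahead forecast via simple exponential smoothing."""
    if not history:
        raise ValueError("history must not be empty")
    level = history[0]
    for value in history[1:]:
        # Blend the newest observation with the running level.
        level = alpha * value + (1 - alpha) * level
    return level


# Hypothetical demand history from a stable period.
stable = [100.0, 102.0, 98.0, 101.0, 99.0]
forecast = exp_smooth_forecast(stable)

# Error if the next value stays near the old level vs. after a regime shift.
error_stable = abs(100.0 - forecast)
error_shift = abs(150.0 - forecast)
```

Here `error_shift` is far larger than `error_stable`, because the model has no mechanism of its own to notice that the world changed.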

3) Data-Driven — Improving with Exposure

The Data-Driven level emphasizes performance improvement through ongoing data exposure. Systems here learn correlations and patterns more effectively as they see more (and more diverse) data. In practice, this looks like continuous model retraining, feature enrichment, and feedback loops that raise accuracy over time.

The key distinction from Predictive: Predictive describes the function (forecasting), while Data-Driven describes the learning posture (performance keeps improving as more data arrives). A system can be predictive without being data-driven (e.g., a static model shipped and never retrained), and a data-driven system may power tasks beyond forecasting, such as classification, ranking, or retrieval.
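The contrast between a static model and a data-driven one can be sketched with a deliberately tiny "model": an estimate of a mean. Both class names and the data are hypothetical. `StaticModel` is fitted once and frozen, while `OnlineModel` folds every new observation into its estimate.

```python
class StaticModel:
    """Fitted once on a training batch, then never updated."""

    def __init__(self, training_data: list[float]) -> None:
        self.prediction = sum(training_data) / len(training_data)


class OnlineModel:
    """Keeps refining its estimate as each new observation arrives."""

    def __init__(self) -> None:
        self.n = 0
        self.prediction = 0.0

    def update(self, x: float) -> None:
        self.n += 1
        # Incremental mean: no need to store past observations.
        self.prediction += (x - self.prediction) / self.n


first_batch = [10.0, 12.0]
later_data = [11.0, 13.0, 14.0]

static = StaticModel(first_batch)
online = OnlineModel()
for x in first_batch + later_data:
    online.update(x)
```

After the later data arrives, `static.prediction` is still the mean of the first batch, while `online.prediction` reflects all five observations. Real systems would retrain far richer models, but the posture is the same.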

4) Adaptive — Responding to Change in Real Time

Adaptive systems adjust to changing data patterns and environments with minimal human intervention. They detect drift, recalibrate thresholds, update policies, or switch strategies online.

Recommendation engines that reweight signals as tastes shift, fraud detectors that harden as adversaries evolve, or control systems that tune parameters on the fly all live here. The essence is resilience under change: performance is maintained not by manual retrains alone but by built-in mechanisms that react to live feedback.
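One way to picture such a built-in mechanism is a detector that re-baselines itself when recent data drifts. The sketch below is an assumption-laden toy: the class name, window size, and tolerances are invented, and production drift detection typically uses more robust statistical tests.

```python
from collections import deque


class DriftAwareThreshold:
    """Flags values above baseline + margin and re-baselines when recent data drifts."""

    def __init__(self, baseline: float, margin: float,
                 window: int = 5, drift_tol: float = 10.0) -> None:
        self.baseline = baseline
        self.margin = margin
        self.drift_tol = drift_tol
        self.recent: deque = deque(maxlen=window)
        self.recalibrations = 0

    def observe(self, x: float) -> bool:
        """Return True if x is anomalous under the baseline in force when it arrives."""
        is_anomaly = x > self.baseline + self.margin
        self.recent.append(x)
        if len(self.recent) == self.recent.maxlen:
            window_mean = sum(self.recent) / len(self.recent)
            if abs(window_mean - self.baseline) > self.drift_tol:
                # Drift detected: adopt the recent level as the new baseline.
                self.baseline = window_mean
                self.recent.clear()
                self.recalibrations += 1
        return is_anomaly


detector = DriftAwareThreshold(baseline=100.0, margin=20.0)
stream = [100.0, 101.0, 99.0, 100.0, 102.0, 130.0, 130.0, 130.0, 130.0, 130.0, 131.0]
flags = [detector.observe(x) for x in stream]
```

In this run the first two readings of 130 are flagged; the detector then recalibrates once and treats the new level as normal, with no manual retrain involved.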

5) Contextual & Semantic — Understanding Meaning and Intent

A major step occurs when systems evolve from adapting to understanding. Contextual & Semantic capability lets models grasp intent, nuance, and meaning, not just surface correlations.

Modern LLMs exemplify this layer. They parse discourse, resolve references, and reason across context to infer what was meant, not only what was said. This is where statistical learning fuses with symbolic cues and world knowledge, enabling more natural collaboration and more accurate instruction following across varied situations.

6) Instruction & Feedback — Learning Through Alignment

At this level, models adapt via human feedback and prompts. Instruction-tuned systems incorporate reinforcement learning from human feedback (RLHF), preference optimization, policy constraints, and governance rules. They adjust tone, safety behavior, and task strategies based on explicit instructions, corrections, or organizational policies.

This is where raw capability becomes aligned capability. The system learns what its human partners value and conforms its outputs accordingly, turning a powerful tool into a governed collaborator.

7) Cognitive / Meta-Adaptive — Self-Monitoring and Correction

At the top sits Cognitive / Meta-Adaptive intelligence: systems that self-monitor their reasoning, reflect, and correct. They maintain uncertainty budgets, check intermediate steps, detect contradictions or bias, and choose when to escalate, abstain, or ask for help.

Architectures such as agent frameworks and governed scaffolds (e.g., GSCP-style pipelines) embody meta-cognition: reasoning about reasoning. These systems can inspect their own plans, revise them, and apply ethical or policy constraints autonomously—foundations for cognitive governance and safe, accountable autonomy.
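The self-monitoring loop can be sketched as a wrapper that checks each draft answer before releasing it. This is only an illustrative skeleton, not how any particular agent framework works: `Draft`, `propose`, `verify`, and the confidence threshold are all hypothetical stand-ins for a model call, an independent checker, and a calibrated uncertainty estimate.

```python
from dataclasses import dataclass
from typing import Callable, Optional, Tuple


@dataclass
class Draft:
    answer: float
    confidence: float  # self-reported, assumed to lie in [0, 1]


def self_monitored_answer(
    propose: Callable[[], Draft],
    verify: Callable[[float], bool],
    min_confidence: float = 0.7,
    max_attempts: int = 3,
) -> Tuple[str, Optional[float]]:
    """Return ("answer" | "escalate" | "abstain", value) after self-checking."""
    for _ in range(max_attempts):
        draft = propose()
        if not verify(draft.answer):
            continue  # failed the self-check: discard this draft and retry
        if draft.confidence < min_confidence:
            return ("escalate", draft.answer)  # plausible but unsure: ask a human
        return ("answer", draft.answer)
    return ("abstain", None)  # no draft survived verification


# Toy task: find x with x * x == 16. The first draft is wrong, the second is right.
drafts = iter([Draft(5.0, 0.9), Draft(4.0, 0.9)])
result = self_monitored_answer(lambda: next(drafts), lambda a: a * a == 16.0)
```

Here `result` is `("answer", 4.0)`: the wrong first draft is caught by the check and replaced. A draft that passes verification with low confidence would be escalated instead, and repeated failures end in abstention.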

From Computation to Cognition

These seven levels trace a path from syntactic computation to semantic understanding and finally to reflective governance. Each level is additive. Algorithmic reliability remains crucial even as higher layers introduce prediction, learning posture, adaptation, meaning, alignment, and self-regulation. Together they form a cumulative spectrum—from rules to self-awareness.

Real-World Implications

Enterprises can treat the model as an adaptability maturity framework: baseline critical workflows at Algorithmic/Predictive, invest in Data-Driven and Adaptive loops for resilience, introduce Contextual & Semantic understanding where language and nuance matter, enforce Instruction & Feedback for policy alignment, and pilot Cognitive/Meta-Adaptive controls for auditable autonomy.

Researchers can map model evolution against distinct capabilities—forecasting accuracy (Predictive), data-efficiency and continual learning (Data-Driven/Adaptive), semantic generalization (Contextual), human preference fit (Instruction & Feedback), and introspective reliability (Meta-Adaptive).

Policymakers gain a vocabulary to scope guardrails: what “autonomy” means at each level, what audits are needed, and which capabilities trigger stronger oversight.

Developers can prioritize engineering investments: instrumentation for drift (Adaptive), retrieval + tool use for semantics (Contextual), preference optimization and governance hooks (Instruction & Feedback), and introspection/verification layers (Meta-Adaptive).

Conclusion

AI’s future will be defined less by parameter counts than by the depth of adaptability. As systems climb from rules to reflection—Algorithmic → Predictive → Data-Driven → Adaptive → Contextual & Semantic → Instruction & Feedback → Cognitive / Meta-Adaptive—they become governed collaborators that can understand, align, and self-correct.

The Adaptability Battery captures that ascent: a practical map for building systems that don’t just compute, but understand, improve, and responsibly regulate themselves.