Advanced Index In MongoDB - Part Six

- Introduction to MongoDB - Part One

- Insert, Update and Delete Document in MongoDB - Part Two

- Query On Stored Data In MongoDB - Part Three

- Advance Query Search On MongoDB - Day Four

- Index on MongoDB - Day Five

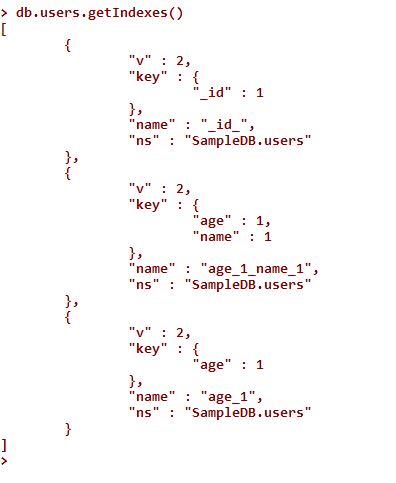

As we already discussed in the previous article, if we want to create new indexes then we need to use the ensureIndex command function. In this process, a new index will be created only when it was not existing for that collection. Normally, every collection must contain one index. So, when we create an index against a collection in MongoDB, at that time, all the information related to the indexes is normally stored in the system.indexes collections which are basically a reserved collection. Due to this, we can’t modify or delete any documents within this collection. So, if we want to view the metadata related information of an index in any collection, then we need to run the below command in the console to view the result, since without a collection name, the index can’t be created. That’s why we need to specify the collection name in this command.

- db.collectionName.getIndexes()

This command will be provided with all the information related to the index against that collection. The most important part of the output of the above is the field's name like “key” and “name”. The key fields can be used to max, min or any other aggregate functions where we can use the index.

In MongoDB, each index within a collection must contain a unique name which represents the index.

Capped CollectionSince in MongoDB, both sides of scaling is possible means both horizontal scaling and vertical scaling. So, in the case of normal collections, the size of the collection will be increased automatically as per the data volume after the automatic creation of the collections. But in MongoDB, there is also another type of collection named Capped Collection. The specialty of this type of collection is it needs to be created in advance (means MongoDB will not be created this collection automatically) and also during create this collection we need to provide the size of the collections. Now, in this case, there will a question related to the vertical scaling of the collections. That is what will be happened when we try to insert new records or data when the size of the collection is totally full? The answer of this question is that since a capped collection is always a fixed size, so when it is full and we try to insert a new record, then the oldest records or documents will be deleted and in place of that new document or records will take place.

The capped collection also contains some more restrictions like we can’t delete or remove any document from capped collections. Documents will be deleted only when it is aged out and new records need to insert in that collections. Capped collection always maintains a different pattern of access within the collections i.e. data is always inserted in a sequence within a fixed section of the disk storage. This type of collections mainly useful for storing logging information.

Since we already told that Capped collections need to be created explicitly before the use. The below command is used to create a capped collection with the size of 100000 bytes.

- db.createCollection("LogHistory", {"capped" : true, "size" : 1000000});

This command i.e. cretaeCollection can also be used to specify the document no so that we can limit the total document no inserted within the capped collection.

- db.createCollection("LogHistory", {"capped" : true, "size" : 1000000, "max":200});

In the above command, we specify a max value of 200 which means this collection can be aged out when total number of the inserted document is 200 although the total size of the document is less than which mention. So, the capped collection will be aged out on basis of any one of size or max value which one is reached first. Once a capped collection is created, we can’t be able to modify that capped collection anymore.

TTL Index (Time-To-Leave Index)

As discussed in the above section, capped collections always give us a limited control because we can’t control the data written logic. So, if we need to some more flexibility, there is another type of process available in the MongoDB which called TTL (Time-To-Leave) index. So, this type of index can be created on a normal collection which is normally automatically created by the MongoDB and both scaling is possible. In this index definition, we can define the timeout value for each document. When that particular document reaches to the extreme age, then that document will be deleted. If we want to create a TTL index, then we need to use an expireAfterSecs option in the ensureIndex() method function. Suppose, we want to create a TTL index, which will provide a 24-hour time span for each and every document, then the definition of the index creation is as below –

- db.users.ensureIndex({"created" : 1}, {"expireAfterSecs" : 60*60*24})

This command creates a TTL index on the created field on users collection. So, the user document will be removed once the server time is cross the expireAfterSecs value against the document data. MongoDB normally checks the TTL index once per minute-based schedule. So we do not need to worry about second wise granular control.

Full-Text Index

MongoDB incorporates a special style of the index for sorting out text among documents. In previous articles, we’ve queried for strings victimization actual matches and regular expressions, but these techniques have some limitations. looking out an outsized block of text for a daily expression is slow and it’s powerful to require linguistic problems into consideration (e.g., that “entry” should match “entries”). Full-text indexes provide you with the flexibility to go looking text quickly, as well as offer constitutional support for multi-language stemming and stop words. While all indexes are costly to make, full-text indexes are significantly heavyweight. Creating a full-text index on a busy assortment will overload MongoDB, thus adding this type of index must always be done offline or at a time once performance doesn't matter. you ought to be cautious of making full-text indexes which will not slot in RAM (unless you have SSDs). See Chapter eighteen for additional info on making indexes with the lowest impact on your application.

Full-text search will incur additional severe performance penalties on writes than “normal” indexes since all strings should be split, stemmed, and keep during a few places. Thus, you will tend to examine poorer write performance on full-text-indexed collections than on others. it'll conjointly impede information movement if you're sharing: all text should be re-indexed when it's migrated to a brand-new piece.

Since, till now full text search index are an experimental stage in case of MongoDB, so we need to enable textSearch featured with the setParameter Commands.

- db.adminCommand({"setParameter" : 1, "textSearchEnabled" : true})

So, if we want run the search on the text field, then we first need to create a text index as below –

- db.blog.ensureIndex({"date" : 1, "post" : "text"})

Now, if we want to use this index, then we must need to use text command for that –

- db.runCommand({text: "post", search: "\"ask hn\" ipod"})

Geospatial Indexes

MongoDB incorporates a few kinds of geospatial indexes. the foremost usually used ones area unit 2dsphere, for surface-of-the-earth-type maps, and 2d, for flat maps (and statistic data). 2dsphere data allows you to specify points, lines, and polygons in GeoJSON format. A point is given by a two-element array, representing [longitude, latitude],

- {

- "name" : "New York City",

- "loc" : {

- "type" : "Point",

- "coordinates" : [50, 2]

- }

- }

A line is given by an array of points,

- {

- "name" : "Hudson River",

- "loc" : {

- "type" : "Line",

- "coordinates" : [[0,1], [0,2], [1,2]]

- }

- }

A polygon is specified the same way a line is (an array of points), but with a different "type",

- {

- "name" : "New England",

- "loc" : {

- "type" : "Polygon",

- "coordinates" : [[0,1], [0,2], [1,2]]

- }

- }

So, if we want to create a geospatial index using the "2dsphere" type then the command will be,

- db.world.ensureIndex({"loc" : "2dsphere"})

There are a unit many kinds of geospatial question that you just will perform: intersection, within, and closeness. To query, specify what you’re searching for as a GeoJSON object that appears like {"$geometry" : geoJsonDesc}.

As with different kinds of indexes, you'll mix geospatial indexes with different fields to optimize a lot of advanced queries. An attainable question mentioned higher than was: “What restaurants are within the East Village?” victimization solely a geospatial index, we tend to may slender the field to everything within the East Village, however narrowing it all the way down to solely “restaurants” or “pizza” would need another field within the index:

- db.open.street.map.ensureIndex({"tags" : 1, "location" : "2dsphere"})

Store data with GridFS

GridFS could be a mechanism for storing giant binary files in MongoDB. There are a unit many reasons why you would possibly think about using GridFS for file storage,

-

Victimization GridFS will modify your stack. If you’re already victimization MongoDB, you might be ready to use GridFS rather than a separate tool for file storage.

-

GridFS can leverage any existing replication or auto-sharding that you’ve come upon for MongoDB, thus obtaining failover and scale-out for file storage is less complicated.

-

GridFS will alleviate a number of the problems that bound filesystems will exhibit once being used to store user uploads. as an example, GridFS doesn't have problems with storing giant numbers of files within the same directory.

-

We will be able to get a nice disk neighborhood with GridFS, as a result of MongoDB allocates knowledge files in two GB chunks.

There are also some downsides, too,

-

Slower performance: accessing files from MongoDB won't be as quick as going directly through the filesystem.

-

You'll be able to solely modify documents by deleting them and resaving the total issue.

-

MongoDB stores files as multiple documents thus it cannot lock all of the chunks in a file at the constant time.

GridFS is mostly best after you have giant files you’ll be accessing during an ordered fashion that won’t be dynamical a lot of.

In this article, we discuss some advance concept of the index in MongoDB like Capped Collection, TTL Index, GridFS etc. These indexes some normally known as the Advance indexes which are mainly used for the database administration related work. In the next article, we will discuss aggregate functions in MongoDB.

Hope, this article will help you. Any feedback or query related to this article are most welcome.